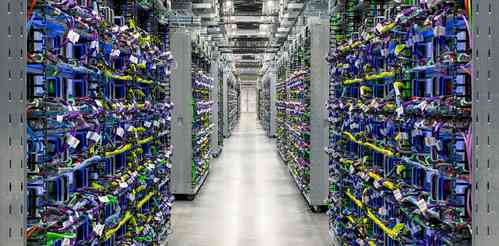

Google expands cost-effective AI-optimised infrastructure portfolio for customers

New Delhi, Aug 30 (IANS) Google on Wednesday expanded its artificial intelligence (AI)-optimised infrastructure portfolio for Cloud customers, adding offerings it says are both cost-effective and scalable.

The company is expanding its AI-optimised infrastructure portfolio with 'Cloud TPU v5e', the most cost-efficient, versatile, and scalable Cloud TPU to date, which is also now available in preview.

"Cloud TPU v5e is purpose-built to bring the cost-efficiency and performance required for medium- and large-scale training and inference. TPU v5e delivers up to 2x higher training performance per dollar and up to 2.5x inference performance per dollar for LLMs and gen AI models compared to Cloud TPU v4," Google said in a blogpost.
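To put the quoted ratios in spending terms: a gain of N times in performance per dollar means the same amount of work costs, at best, 1/N of what it did before. The short sketch below works through that arithmetic. The 2x and 2.5x figures are Google's stated upper bounds; the baseline budget and the function name `relative_cost` are hypothetical and used only for illustration.

```python
def relative_cost(perf_per_dollar_gain: float) -> float:
    """Fraction of the baseline spend needed to do the same amount of work."""
    if perf_per_dollar_gain <= 0:
        raise ValueError("gain must be positive")
    return 1.0 / perf_per_dollar_gain


# "Up to" figures quoted by Google, relative to Cloud TPU v4.
TRAINING_GAIN = 2.0
INFERENCE_GAIN = 2.5

# Hypothetical Cloud TPU v4 budget in dollars (an assumption, not a real price).
baseline_spend = 100_000.0

training_spend = baseline_spend * relative_cost(TRAINING_GAIN)
inference_spend = baseline_spend * relative_cost(INFERENCE_GAIN)

print(f"Training:  ${training_spend:,.0f} in the best case")
print(f"Inference: ${inference_spend:,.0f} in the best case")
```

Because the claims are "up to" figures, these amounts are best-case floors rather than expected bills; actual savings would depend on the model and workload.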

According to the company, TPU v5e is also incredibly versatile, with support for eight different virtual machine (VM) configurations, ranging from one chip to more than 250 chips within a single slice, allowing customers to choose the right configurations to serve a wide range of LLM and gen AI model sizes.

Cloud TPU v5e also provides built-in support for leading AI frameworks such as JAX, PyTorch, and TensorFlow, along with popular open-source tools like Hugging Face’s Transformers and Accelerate, PyTorch Lightning, and Ray.

Moreover, the tech giant announced that its A3 VMs, based on Nvidia H100 GPUs and delivered as a GPU supercomputer, will be generally available next month to power customers' large-scale AI models.

"Today, we’re thrilled to announce that A3 VMs will be generally available next month. Powered by Nvidia’s H100 Tensor Core GPUs, which feature the Transformer Engine to address trillion-parameter models, A3 VMs are purpose-built to train and serve especially demanding gen AI workloads and LLMs," Google said.

The A3 VM features dual 4th Gen Intel Xeon Scalable processors, eight Nvidia H100 GPUs per VM, and 2TB of host memory.

Built on the latest Nvidia HGX H100 platform, the A3 VM delivers 3.6 TB/s bisectional bandwidth between the eight GPUs via fourth-generation Nvidia NVLink technology.
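One plausible way to arrive at the 3.6 TB/s figure is sketched below. It assumes each H100 GPU has 900 GB/s of NVLink bandwidth, a number taken from Nvidia's published H100 specifications and not from this report, and it treats bisection bandwidth as the combined bandwidth of one half of the GPUs talking to the other half. The function name is hypothetical.

```python
# Assumption: per-GPU NVLink bandwidth in GB/s, per Nvidia's published H100 specs.
NVLINK_GB_PER_S_PER_GPU = 900
# From the article: eight H100 GPUs per A3 VM.
GPUS_PER_VM = 8


def bisection_bandwidth_tb_per_s(num_gpus: int, per_gpu_gb_per_s: float) -> float:
    """Bandwidth across a cut that splits the GPUs into two equal halves."""
    if num_gpus < 2 or num_gpus % 2 != 0:
        raise ValueError("expected an even number of GPUs")
    gpus_in_one_half = num_gpus // 2
    # Each GPU in one half can drive its full NVLink bandwidth across the cut.
    return gpus_in_one_half * per_gpu_gb_per_s / 1000


result = bisection_bandwidth_tb_per_s(GPUS_PER_VM, NVLINK_GB_PER_S_PER_GPU)
print(f"Estimated bisection bandwidth: {result:.1f} TB/s")
```

Under those assumptions, four GPUs at 900 GB/s each give the 3.6 TB/s quoted for the A3 VM.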

IANS
